Machine learning topological phases

Valerio Peri

In previous posts we have seen how machine learning can tackle the task of classifying phases of matter. Both unsupervised and supervised learning performed well in the presence of a local order parameter [1,2,3]. Moreover, supervised learning could also learn phases of matter without an order parameter, such as the Ising gauge model [3]. However, to be successful one needs to resort to convolutional neural networks and translational symmetry, or to make use of strong local constraints. In this week's post we focus on a new technique, presented in [4], that could simplify the task of phase classification when we lack a local order parameter, as is the case for topological matter. The main focus of this technique is to find suitable input data relevant for the phase under study.

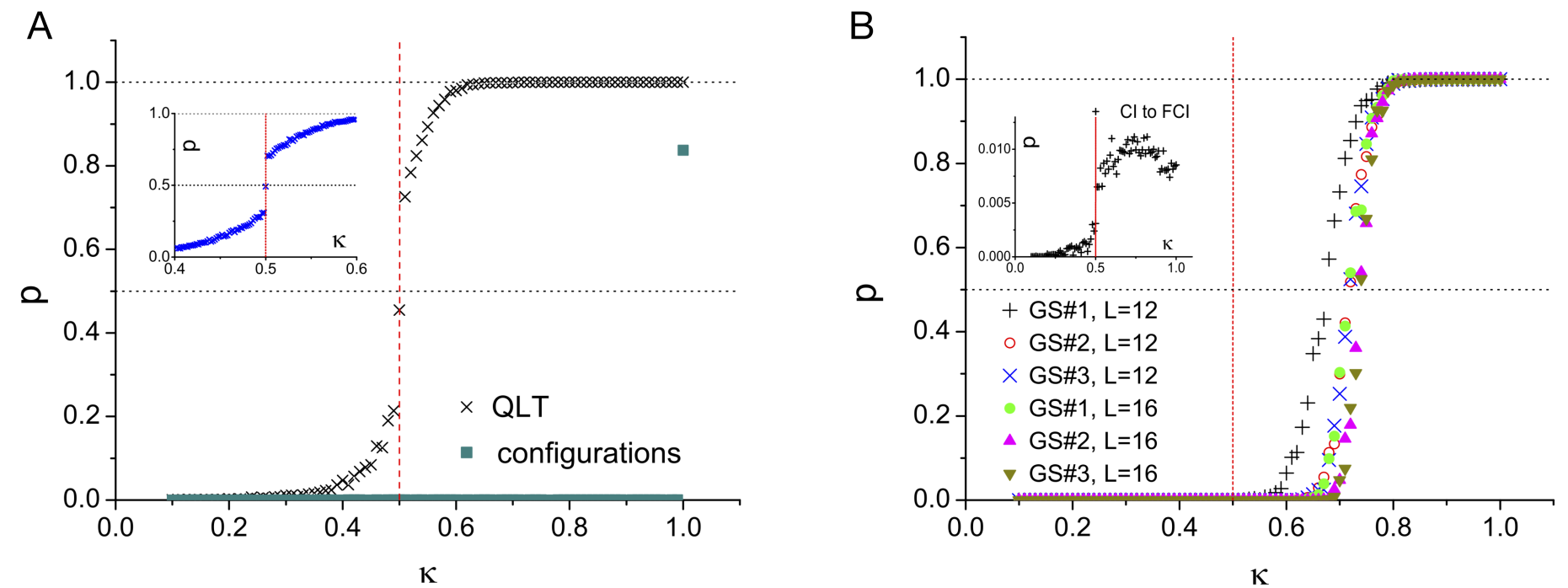

As a case study, the authors take a model, parametrized by k, that is well suited to the task. Its entire phase diagram is already known and displays a topological phase transition. The model describes a Chern insulator on a square lattice for k>0.5, while for k<0.5 it corresponds to decoupled two-leg ladders: a trivial phase. The neural network was initially trained with the most straightforward input: the occupation number of each lattice site for representative configurations of the two phases. As one might expect, the network fails to learn the phases: it just memorizes the model on which it has been trained at k=1, as shown in the inset of Fig. 2a.

To successfully classify topological matter, we need to provide some non-local information, since topological phases are usually characterized by non-local response functions. However, we would also like to avoid computationally costly calculations of Green's functions or entanglement spectra.

A useful hint comes from Kitaev's alternative formulation [5] of the Hall conductivity, the quantity that we know characterizes the Chern insulator.
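For reference, the formula can be written in symbols as follows. This is our transcription of the form used in [4], so the notation should be read as an assumption of this sketch: P_jk denotes the equal-time two-point correlation function between sites j and k, S_△jkl the signed area of the triangle with vertices j, k, l, and N the number of lattice sites.

```latex
\sigma_{xy} = \frac{e^2}{h}\,\frac{1}{N}\, 4\pi i \sum_{j,k,l} P_{jk}\, P_{kl}\, P_{lj}\, S_{\triangle jkl},
\qquad P_{jk} \equiv \langle c_j^{\dagger} c_k \rangle
```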

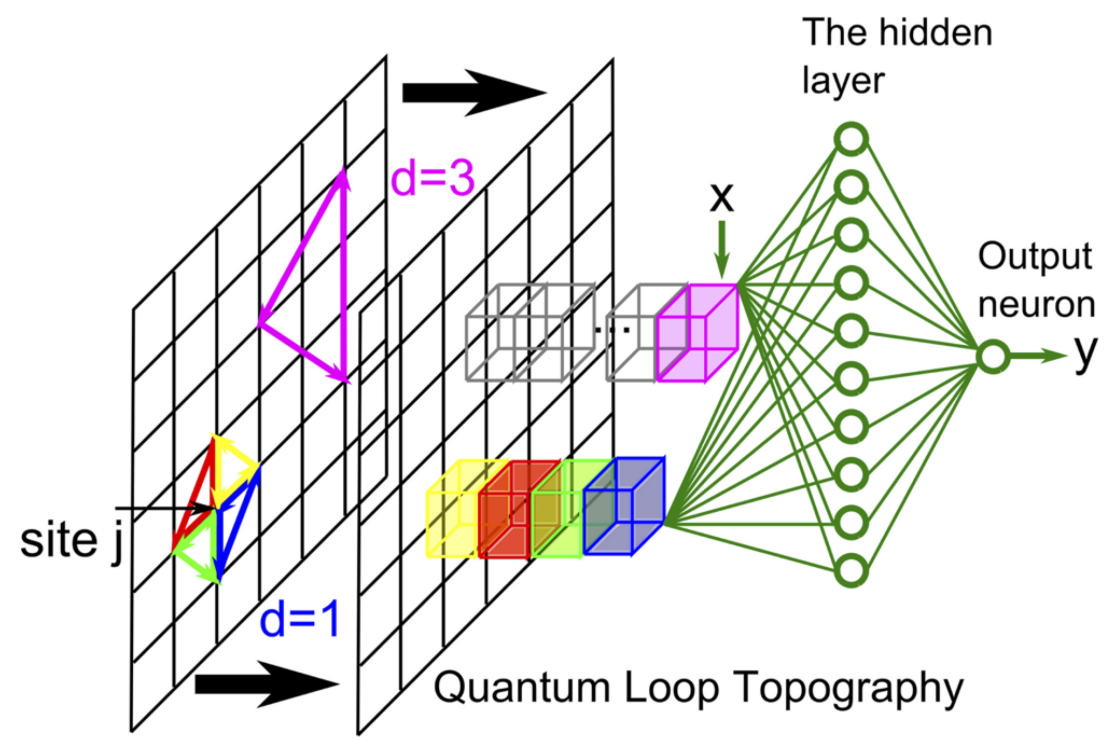

This response function can be written as a sum over products of two-point correlation functions that form a triangular loop. Therefore, one could provide as input to the neural network a vector for each lattice site containing all the possible triangular loops of non-averaged two-point correlation functions that have a vertex on that lattice site. This is the procedure that the authors call "quantum loop topography" (QLT), schematically presented in Fig. 1. In principle, the sum in Kitaev's formula extends over all possible triangles. However, in gapped systems away from the phase transition, correlations decay exponentially with distance and one can set a cutoff on the maximum triangle size. The results presented in Fig. 2a show that a fully connected neural network successfully learns the phases of the model with as few as 10 hidden neurons and a maximum triangle size of 2.
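To make the construction concrete, here is a minimal Python sketch of how such per-site loop vectors could be assembled. It is not the authors' implementation: the function names, the flat site indexing, the periodic boundary conditions and the precise meaning of the cutoff `d_c` (the other two vertices lie in a square window of half-width `d_c` around the site) are all assumptions made for illustration. The matrix `P` stands in for a single-sample estimate of the two-point correlation functions.

```python
import itertools
import numpy as np

def site_index(x, y, L):
    """Map periodic lattice coordinates (x, y) to a flat site index."""
    return (x % L) * L + (y % L)

def qlt_features(P, L, d_c=1):
    """Return a complex array of shape (L*L, n_loops) of triangular loop products.

    P   : (L*L, L*L) complex array; P[j, k] stands in for a single-sample
          estimate of the two-point correlator between sites j and k.
    L   : linear size of the periodic square lattice (needs L > 2 * d_c).
    d_c : assumed cutoff; vertices k and l lie within a (2*d_c + 1)^2 window
          centred on site j.
    """
    # All non-zero displacements inside the cutoff window.
    offsets = [(dx, dy)
               for dx in range(-d_c, d_c + 1)
               for dy in range(-d_c, d_c + 1)
               if (dx, dy) != (0, 0)]
    # Ordered pairs of distinct displacements define oriented loops j -> k -> l -> j.
    # Collinear (zero-area) triples are kept here for simplicity.
    pairs = list(itertools.permutations(offsets, 2))

    feats = np.empty((L * L, len(pairs)), dtype=complex)
    for x in range(L):
        for y in range(L):
            j = site_index(x, y, L)
            for n, ((ax, ay), (bx, by)) in enumerate(pairs):
                k = site_index(x + ax, y + ay, L)
                l = site_index(x + bx, y + by, L)
                # Product of correlators around the loop.
                feats[j, n] = P[j, k] * P[k, l] * P[l, j]
    return feats
```

Since the loop products are complex, one option for a real-valued network is to stack real and imaginary parts, for example `np.concatenate([F.real, F.imag], axis=1)`, before feeding each row to a small fully connected classifier.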

How should we judge the performance of this approach? One could argue that the structure of the provided data was strongly based on the quantity that is known to characterize the phase under study, Kitaev's formula for the Chern number. However, the power of QLT lies in its ability to generalize! The authors apply QLT to a fractional Chern insulator. They sample from the same variational wavefunction as for the Chern insulator, but raised to the third power. In this case, the formula for the Hall conductivity provided by Kitaev does not hold. Nevertheless, they succeed in classifying the two phases of the model, Fig. 2b. As expected, the neural network also captures the ground state degeneracy even when trained on a single ground state configuration.
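Raising the wavefunction to the third power only changes the weight with which configurations are sampled. The sketch below illustrates this for a Metropolis update, which is our assumption about the sampler; the function name and the interface based on log-amplitudes are hypothetical and not taken from [4].

```python
import math

def acceptance_probability(log_abs_psi_old, log_abs_psi_new, power=3):
    """Metropolis acceptance probability for the sampling weight |psi**power|**2.

    log_abs_psi_old, log_abs_psi_new : log|psi| of the underlying wavefunction
        for the current and the proposed configuration (hypothetical inputs).
    power : 3 for the cubed wavefunction; 1 recovers the original wavefunction.
    """
    # |psi^p|^2 = |psi|^(2p), so the log of the weight ratio is 2p times the
    # difference of the log-amplitudes.
    log_ratio = 2.0 * power * (log_abs_psi_new - log_abs_psi_old)
    # Moves to a larger weight are always accepted; others with the weight ratio.
    return math.exp(min(0.0, log_ratio))
```

With `power=3` a proposed move that lowers |psi| is suppressed by the sixth power of the amplitude ratio instead of the second, so the same sampling code can serve both the integer and the fractional case.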

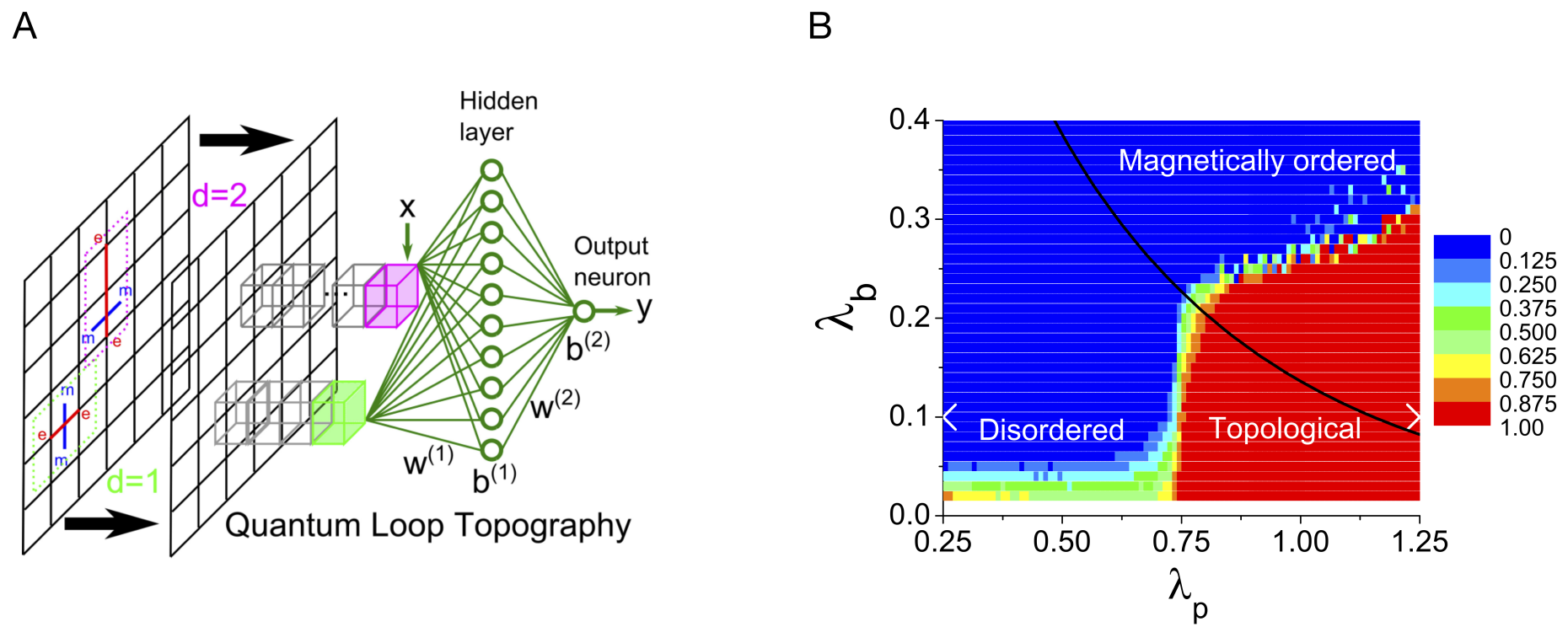

In a follow-up paper [6], the authors further extend QLT to a non-chiral phase: the toric code in an external field. Here they consider as input data loops of quasiparticle excitations, as schematically shown in Fig. 3a. Again, by feeding the neural network with minimally non-local information that characterizes the phase, the authors succeed in learning the phase diagram of the model under consideration, as shown in Fig. 3b.

We have seen how a simple fully connected neural network can be trained to learn topological phases of matter with a little help from the human side. QLT provides a useful tool to interface response function theory and machine learning for phase classification. Moreover, it is a fully real-space technique that could be suitable for tackling disordered systems. However, there are a few questions that have not yet been addressed. It would be interesting to look at the weights of the neural network to see what it actually learns in the different cases. It would also be interesting to quantify how much knowledge of the phase under study is needed. How would the neural network perform if we fed it just two-point correlation functions instead of triangular loops? Answering these questions could provide a further advancement in the use of machine learning to tackle topological phases of matter.

1. L. Wang, Phys. Rev. B 94, 195105 (2016)

2. S.J. Wetzel, Phys. Rev. E 96, 022140 (2017)

3. J. Carrasquilla and R. Melko, Nature Physics 13, 431 (2017)

4. Y. Zhang and E.-A. Kim, Phys. Rev. Lett. 118, 216401 (2017)

5. A. Kitaev, Ann. Phys. 321, 2 (2006)

6. Y. Zhang et al., Phys. Rev. B 96, 245119 (2017)