Exact Wavefunctions with Deep Neural Networks

Frank Schindler

In a recent post, we saw how restricted Boltzmann machines (RBMs) can be used to encode some standard quantum many-body wavefunctions efficiently. Here, efficient encoding means that the number of parameters needed to store a given state scales only polynomially with the physical size of the system. This avoids the exponential computational cost of manipulating the exact eigenstates of the corresponding many-body Hamiltonian. However, don’t get too excited! Gao and Duan [1] showed that RBMs in fact cannot represent arbitrary physical states efficiently. That is, there are ground states of physically reasonable, local Hamiltonians, as well as states generated by time evolution with such Hamiltonians, which cannot be stored in an RBM without a number of parameters that scales exponentially with the system size. Note that this does not contradict the well-known result [2] that RBMs can model any statistical distribution arbitrarily well when we allow for an exponential number of parameters.
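To get a feeling for what polynomial versus exponential scaling means here, the following minimal sketch compares the number of RBM parameters with the number of amplitudes of a generic spin-1/2 state. The function names and the choice of a fixed ratio of hidden to visible spins (`hidden_density`) are assumptions made for illustration and are not taken from Refs. [1] or [2].

```python
def rbm_parameter_count(n_visible, hidden_density=2):
    """Count RBM parameters: visible biases, hidden biases, and weights.

    Assumes (for illustration) that the number of hidden spins is a fixed
    multiple `hidden_density` of the number of visible spins.
    """
    n_hidden = hidden_density * n_visible
    return n_visible + n_hidden + n_visible * n_hidden


def full_state_amplitude_count(n_visible):
    """Number of amplitudes of a generic state of n_visible spin-1/2 sites."""
    return 2 ** n_visible


if __name__ == "__main__":
    for n in (10, 20, 40):
        print(n, rbm_parameter_count(n), full_state_amplitude_count(n))
```

With a fixed hidden density, the parameter count grows quadratically in the system size, while the full state vector grows as \(2^N\).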

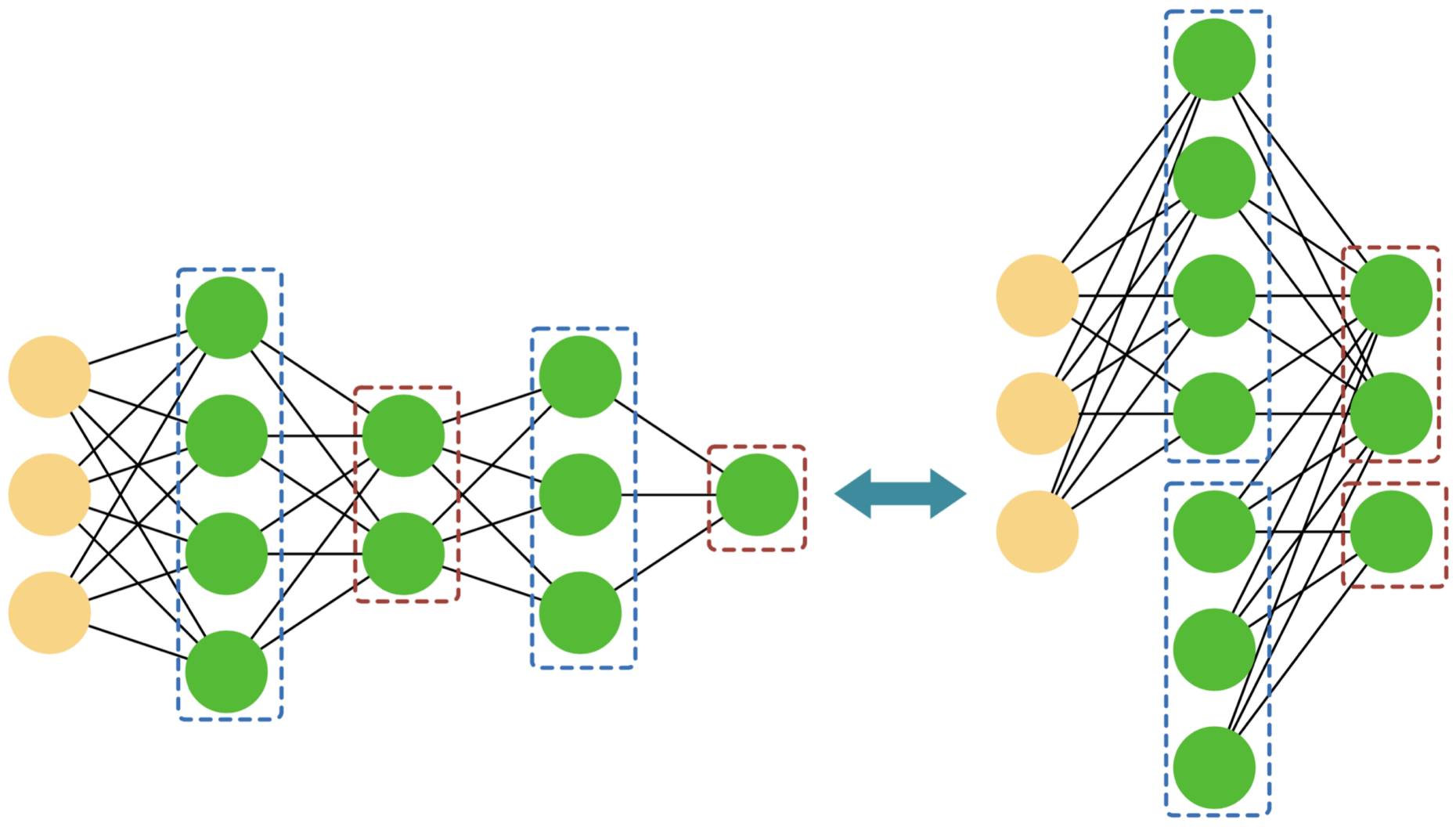

There is a way out, though: if we allow for a second hidden layer, and thus employ a deep Boltzmann machine (DBM), we know from Ref. [1] that we can efficiently encode all reasonable physical states. Note that a DBM with any number of additional hidden layers can be rewritten as one with two hidden layers, so two hidden layers are all we need.

The state of a spin-1/2 system in the \(\sigma^z\)-basis, when encoded by a DBM, can therefore be written as \[\Psi_{\mathcal W}(\sigma^z)=\sum_{\{h,d\}} \exp\bigg[\sum_i \big(a_i\sigma_i^z + b_ih_i +b_i'd_i\big) +\sum_{ij}\big( \sigma_i^z W_{ij}h_j + h_iW_{ij}' d_j \big)\bigg]\]
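For very small networks, the sum over hidden configurations can simply be carried out by brute force. The sketch below does this for the expression above, assuming that the hidden spins take the values \(\pm 1\) and that all parameters are real (in general they may be complex). The function name `dbm_amplitude` and the argument names `b_prime` and `W_prime` are hypothetical and chosen only to mirror the symbols in the formula.

```python
import itertools
import math


def dbm_amplitude(sigma, a, b, b_prime, W, W_prime):
    """Unnormalized DBM amplitude Psi_W(sigma) by explicit summation.

    sigma   : visible spins, each +1 or -1
    a       : visible biases, len(sigma)
    b       : biases of the first hidden layer h
    b_prime : biases of the second hidden layer d
    W       : W[i][j] couples sigma_i to h_j
    W_prime : W_prime[j][k] couples h_j to d_k
    Assumption: hidden spins take values in {-1, +1}; parameters are real.
    """
    n_v, n_h, n_d = len(sigma), len(b), len(b_prime)
    total = 0.0
    for h in itertools.product((-1, 1), repeat=n_h):
        for d in itertools.product((-1, 1), repeat=n_d):
            exponent = sum(a[i] * sigma[i] for i in range(n_v))
            exponent += sum(b[j] * h[j] for j in range(n_h))
            exponent += sum(b_prime[k] * d[k] for k in range(n_d))
            exponent += sum(sigma[i] * W[i][j] * h[j]
                            for i in range(n_v) for j in range(n_h))
            exponent += sum(h[j] * W_prime[j][k] * d[k]
                            for j in range(n_h) for k in range(n_d))
            total += math.exp(exponent)
    return total
```

The double loop visits all \(2^{n_h+n_d}\) hidden configurations, so this is only a check for toy sizes, not a practical way to evaluate a DBM.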

In this expression, \(h\) and \(d\) denote the spins of the first and second hidden layer, respectively, which come with their individual weights and biases. At first sight, we run into a problem when we want to optimize the parameters \(\mathcal{W}=(a,b,b',W,W')\) of a DBM so as to best approximate a given ground state: remember that for an RBM, one can evaluate the summation over all hidden spins, thus determining the form of the corresponding wavefunction analytically. This is no longer possible for a DBM, because the hidden spins \(h\) couple to both \(\sigma^z\) and \(d\), so the sum does not factorize. Tracing changes in the parameters back to changes in the energy therefore becomes costly, and so does training a DBM.
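To make the RBM statement concrete: for hidden spins \(h_j=\pm 1\), the sum over \(h\) factorizes into a product of hyperbolic cosines, \(\Psi(\sigma^z)=e^{\sum_i a_i\sigma_i^z}\prod_j 2\cosh\big(b_j+\sum_i\sigma_i^z W_{ij}\big)\). The following minimal sketch checks this closed form against a brute-force sum for a small RBM. The function names and the test parameters are made up for illustration, and real parameters are assumed.

```python
import itertools
import math


def rbm_amplitude_bruteforce(sigma, a, b, W):
    """Sum exp(...) explicitly over all hidden configurations h in {-1,+1}^M."""
    n_v, n_h = len(sigma), len(b)
    total = 0.0
    for h in itertools.product((-1, 1), repeat=n_h):
        exponent = sum(a[i] * sigma[i] for i in range(n_v))
        exponent += sum(b[j] * h[j] for j in range(n_h))
        exponent += sum(sigma[i] * W[i][j] * h[j]
                        for i in range(n_v) for j in range(n_h))
        total += math.exp(exponent)
    return total


def rbm_amplitude_closed_form(sigma, a, b, W):
    """Same amplitude with the hidden spins traced out analytically."""
    n_v, n_h = len(sigma), len(b)
    prefactor = math.exp(sum(a[i] * sigma[i] for i in range(n_v)))
    product = 1.0
    for j in range(n_h):
        theta = b[j] + sum(sigma[i] * W[i][j] for i in range(n_v))
        product *= 2.0 * math.cosh(theta)
    return prefactor * product


if __name__ == "__main__":
    sigma = [1, -1, 1]
    a = [0.1, -0.3, 0.2]
    b = [0.05, -0.15]
    W = [[0.2, -0.1], [0.4, 0.3], [-0.25, 0.35]]
    print(rbm_amplitude_bruteforce(sigma, a, b, W))
    print(rbm_amplitude_closed_form(sigma, a, b, W))
```

The closed form costs a number of operations proportional to the number of weights, whereas the brute-force sum grows as \(2^M\) with the number \(M\) of hidden spins. For a DBM, only the second kind of evaluation is available in general.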

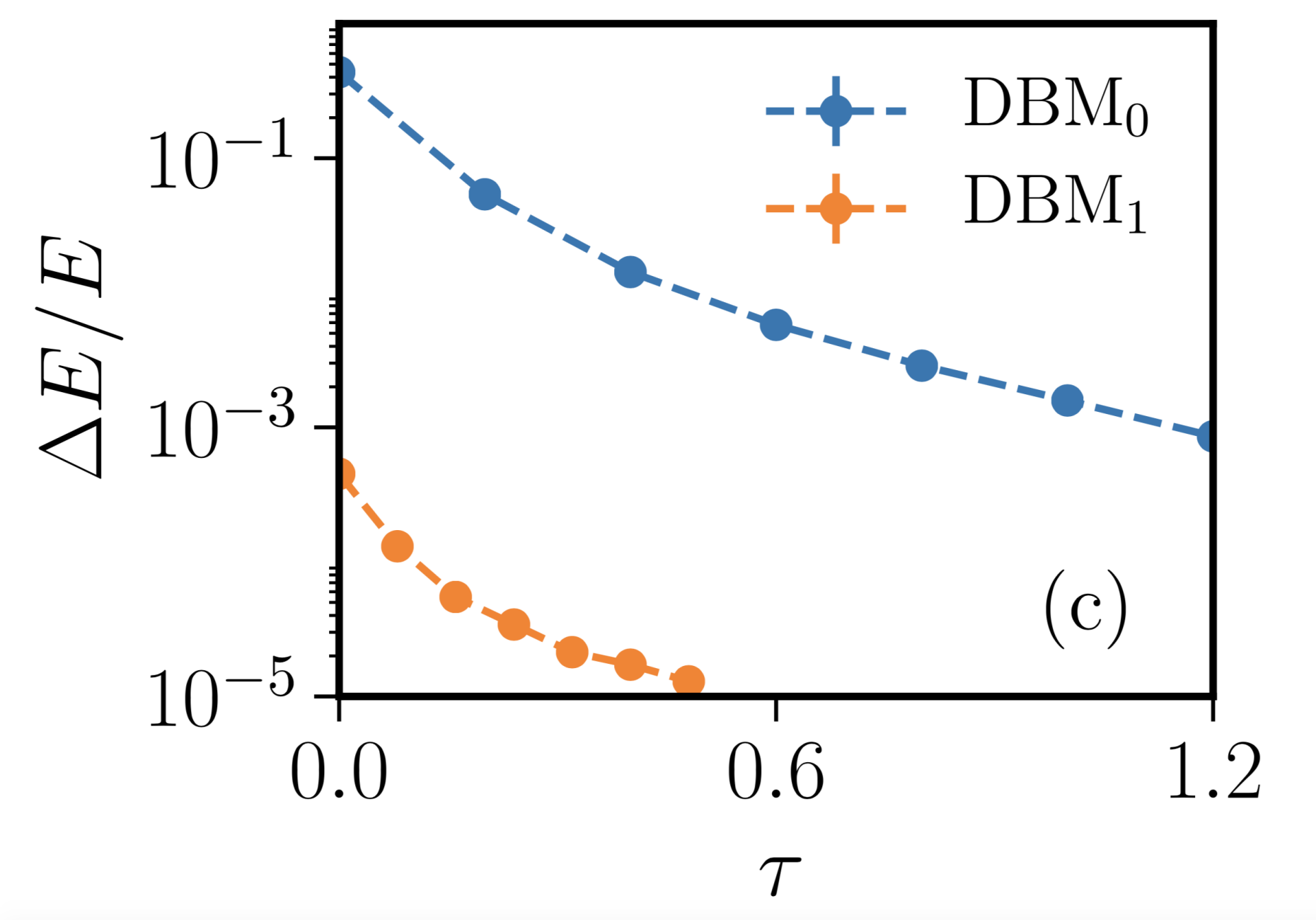

Carleo, Nomura and Imada [3] show how to avoid this problem: they provide a prescription to efficiently optimize the parameters of a DBM for two paradigmatic one-dimensional spin-1/2 models of many-body physics, the transverse-field Ising model and the Heisenberg model. Their idea is to perform an exact imaginary-time evolution in the parameter space of DBMs instead of just employing them as variational wavefunctions. Remember that a ground state of a gapped Hamiltonian \(H\) can be written, up to normalization, as the infinite-time limit of the imaginary-time evolved state \(|\Psi(\tau)\rangle = e^{-\tau H}|\Psi(0)\rangle\), where \(|\Psi(0)\rangle \) denotes an arbitrary state that has non-zero overlap with the true ground state. When we make the ansatz \[\langle \sigma_1^z\cdots \sigma_N^z|\Psi(\tau)\rangle = \Psi_{\mathcal W}(\sigma^z)\] we can map this time evolution to an evolution of the DBM parameters \({\mathcal W}(\tau)\). Ref. [3] then proceeds to show how such an exact mapping can be achieved in the case of the two models mentioned above. This algorithm therefore allows one to encode the state of the respective model faithfully at every step of the imaginary-time evolution, without the need for a variational optimization of the DBM parameters. To achieve this, it turns out to be necessary to add, at every timestep, a number of hidden neurons to the DBM that is proportional to the system size. The total number of DBM parameters is therefore linear in the elapsed imaginary time, as well as in the system size. Note that the only discrepancy between the DBM state obtained in this way and the true ground state comes from the fact that \(\tau\) is finite.
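The imaginary-time projection itself can be illustrated on a tiny system without any neural network. The sketch below is not the DBM construction of Ref. [3]: it stores the full state vector of an open transverse-field Ising chain, \(H=-J\sum_i\sigma_i^z\sigma_{i+1}^z-\Gamma\sum_i\sigma_i^x\), and replaces \(e^{-\delta\tau H}\) by the first-order step \(1-\delta\tau H\) followed by normalization. The function names, the open boundary conditions, the uniform initial state and the step size are all assumptions made for illustration.

```python
import math


def apply_tfim(psi, n, J=1.0, gamma=1.0):
    """Return H|psi> for H = -J sum_i sz_i sz_{i+1} - gamma sum_i sx_i (open chain).

    Basis state s encodes spin i as bit i: 1 means sz = +1, 0 means sz = -1.
    """
    out = [0.0] * len(psi)
    for s, amp in enumerate(psi):
        spins = [1 if (s >> i) & 1 else -1 for i in range(n)]
        diagonal = -J * sum(spins[i] * spins[i + 1] for i in range(n - 1))
        out[s] += diagonal * amp
        for i in range(n):
            out[s ^ (1 << i)] += -gamma * amp  # sx_i flips spin i
    return out


def energy(psi, n, J=1.0, gamma=1.0):
    """Rayleigh quotient <psi|H|psi> / <psi|psi> for a real state vector."""
    h_psi = apply_tfim(psi, n, J, gamma)
    return sum(x * y for x, y in zip(psi, h_psi)) / sum(x * x for x in psi)


def imaginary_time_evolve(n, steps, dtau, J=1.0, gamma=1.0):
    """Repeatedly apply (1 - dtau * H) and normalize, starting from a uniform state.

    This is a first-order approximation of exp(-dtau * H), used here only
    to illustrate the projection onto the ground state.
    """
    dim = 2 ** n
    psi = [1.0 / math.sqrt(dim)] * dim
    energies = []
    for _ in range(steps):
        h_psi = apply_tfim(psi, n, J, gamma)
        psi = [x - dtau * y for x, y in zip(psi, h_psi)]
        norm = math.sqrt(sum(x * x for x in psi))
        psi = [x / norm for x in psi]
        energies.append(energy(psi, n, J, gamma))
    return psi, energies


if __name__ == "__main__":
    _, energies = imaginary_time_evolve(n=2, steps=400, dtau=0.05)
    print(energies[0], energies[-1])
```

For two sites with \(J=\Gamma=1\), the exact ground-state energy is \(-\sqrt{5}\approx-2.236\), which the energy approaches as the number of steps grows. The memory cost of this brute-force version is \(2^N\), which is precisely what the DBM representation is meant to avoid.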

Ref. [3] leaves a few questions open. While the authors provide an efficient algorithm to arrive at the ground states of the one-dimensional transverse-field Ising and Heisenberg models, it remains unclear whether we can find an exact representation of the imaginary-time evolution in terms of DBM parameters for all physically relevant models, especially in higher dimensions. The authors also do not compare their method with the established matrix-product state algorithms that perform imaginary-time evolution efficiently in one dimension. It thus remains an open question whether the DBM-based imaginary-time evolution presented here will contribute to the understanding of those problems of many-body physics that have so far eluded the conventional methods of the field.

- [1] Gao, Xun, and Lu-Ming Duan. "Efficient representation of quantum many-body states with deep neural networks." Nature Communications 8.1 (2017): 662.

- [2] Le Roux, Nicolas, and Yoshua Bengio. "Representational power of restricted Boltzmann machines and deep belief networks." Neural Computation 20.6 (2008): 1631-1649.

- [3] Carleo, Giuseppe, Yusuke Nomura, and Masatoshi Imada. "Constructing exact representations of quantum many-body systems with deep neural networks." arXiv:1802.09558 (2018).